The future of software engineering: More devices, more data, smarter experiences

December 8, 2023

In June 2023, Apple unveiled the Vision Pro, its latest innovation in the consumer device space. This continues a trend set in motion over 15 years ago with the iPhone, when we all started carrying around a sensor-filled device that enabled rich experiences. Around the same time, Internet of Things (IoT) devices, driven by cloud computing, became part of our everyday lives. At Mirego, we believe that this trend of new sensor-rich devices producing ever-increasing amounts of data, and thus enabling brand new possibilities and experiences, will only accelerate in the next few years. What are the impacts of this trend? How are we preparing to leverage those new opportunities? Let’s explore the topic.

A broader spectrum of devices

Computing and networking capacities have improved drastically over the last few years, making it possible to build devices that can gather, process, and share larger amounts of data. If this trend continues, as we expect, we will be able to create better, more immersive real-time experiences that significantly reduce the gap between the digital and physical worlds.

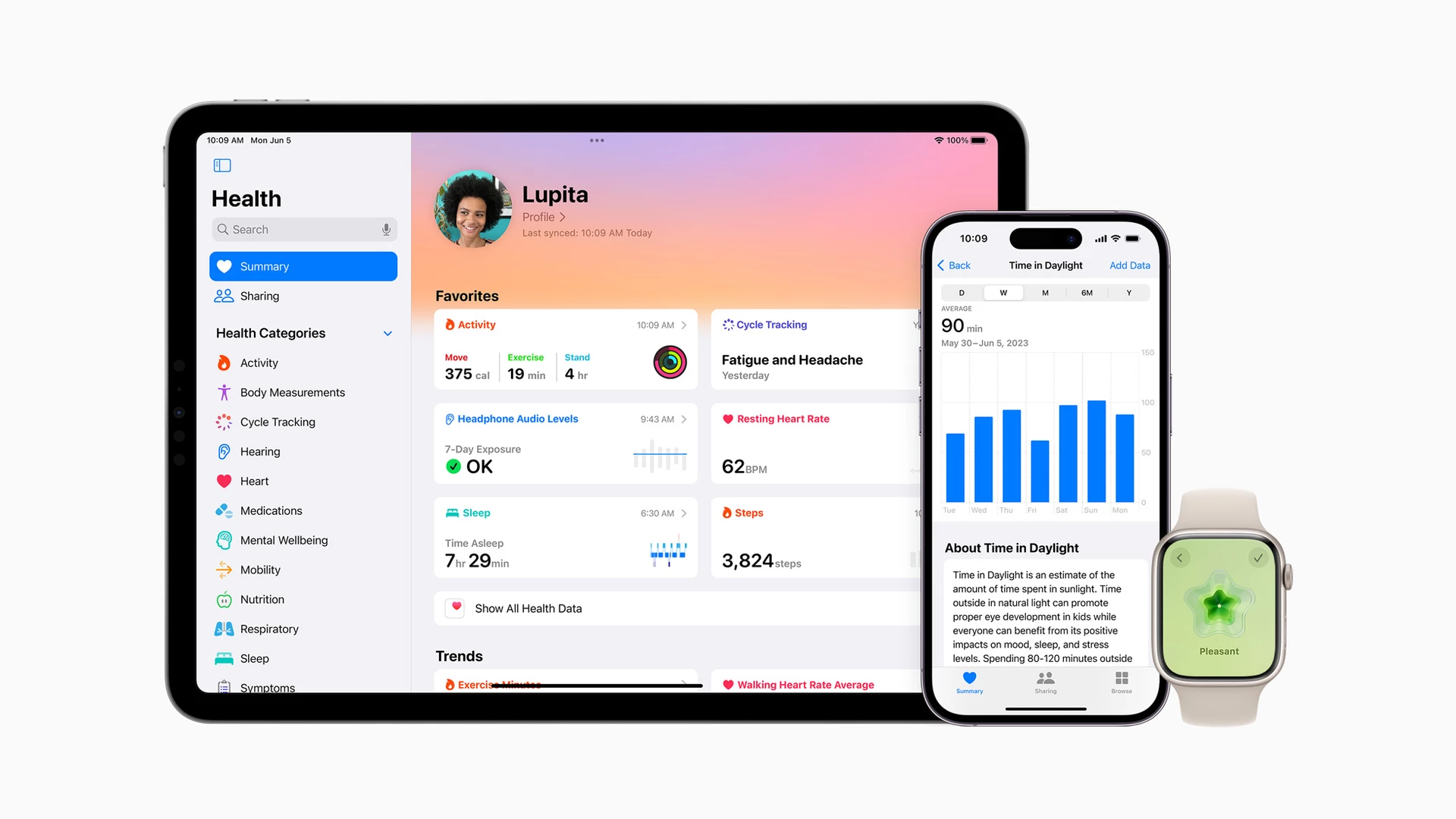

Today, our smartwatch can provide all kinds of health-related information, such as our heart rate, our sleep patterns, and much more. Athletes now train with a plethora of sensors attached to them, enabling fine-grained optimization of their performance and providing advanced analytics to coaches and fans alike.

Apple

New health features in iOS 17, iPadOS 17, and watchOS 10 expand into two impactful areas and provide innovative tools and experiences across platforms.

Who knows what tomorrow will look like? We expect some form of headset to become ubiquitous, and with it, digital products will be able to collect large amounts of data about our movements and our surroundings. We would also not be surprised to see open protocols emerge for collecting data from shared sensors in real time. While open data accessible through APIs is commonly used in today’s applications, there are few cases where nearby sensors can be seamlessly accessed to enrich applications. Of course, there are numerous security and privacy issues to tackle before such experiences become possible, but they should not be insurmountable.

AI will change the way we handle data

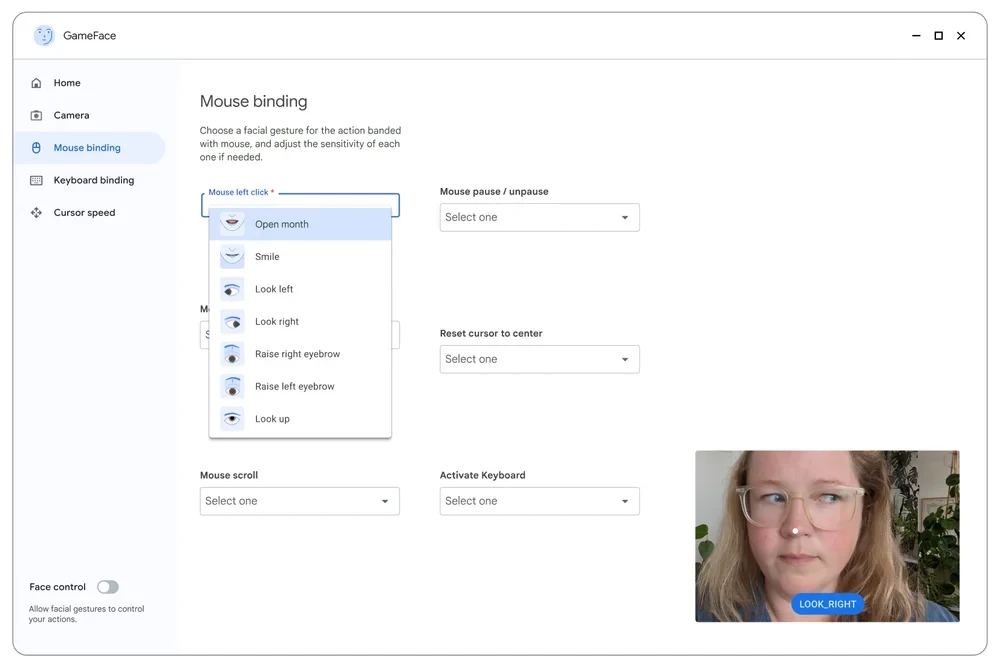

It is impossible to write about the future without mentioning the potential impact of artificial intelligence. In this case, the new devices and the sheer quantity of data harvested will be used to feed machine-learning algorithms, which in turn will enable new capabilities. We expect to see more AI-powered, intent-based features in tomorrow’s applications, where users will state what they want to do, without necessarily knowing how to accomplish the task. A good example of this is voice commands, which are already becoming ubiquitous. Most devices can now be controlled by voice, whether it’s your iOS device with Siri, your Android device with the Google Assistant, your car with CarPlay/Android Auto, your TV or even your gaming console. The next step in seamless command inputs appears to be eye-controlled commands, which will be the primary input for the Vision Pro.

Apple

The revolutionary visionOS features a brand-new three-dimensional interface that users can magically control with their eyes, hands, and voice.

With the vast amounts of data being produced and shared, the strain on computing and networking infrastructure could very well become a real issue. This is why processing at the edge will gain (or regain?) popularity. Edge processing is already gaining traction, most notably in AI, where a lot of effort is going into running models directly on devices. Keeping data on the device can help with privacy, and it also reduces the network load. We expect to see more and more optimization of where processing happens, resulting in less data having to travel back and forth between clients and servers. Most of the processing could be done on the client or even on IoT devices.
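To make the idea of processing at the edge a little more concrete, here is a minimal sketch in Python. It assumes a device that samples a sensor (heart rate, in this made-up example) and sends a compact summary per time window upstream instead of every raw reading. The names `Summary`, `summarize_window`, and `summarize_stream` are hypothetical and exist only for illustration; a real device would likely do this in a lower-level language and with a streaming buffer.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Iterable, List


@dataclass(frozen=True)
class Summary:
    """Compact payload sent upstream instead of the raw readings (hypothetical)."""
    count: int
    minimum: float
    maximum: float
    average: float


def summarize_window(readings: Iterable[float]) -> Summary:
    """Reduce one window of raw readings to a single summary record."""
    values = list(readings)
    if not values:
        raise ValueError("cannot summarize an empty window")
    return Summary(len(values), min(values), max(values), mean(values))


def summarize_stream(readings: Iterable[float], window_size: int) -> List[Summary]:
    """Split readings into fixed-size windows and summarize each one on the device."""
    if window_size <= 0:
        raise ValueError("window_size must be positive")
    values = list(readings)
    return [
        summarize_window(values[start:start + window_size])
        for start in range(0, len(values), window_size)
    ]


# Made-up sample data: six raw readings become two summaries.
heart_rate = [60, 62, 64, 90, 92, 94]
summaries = summarize_stream(heart_rate, window_size=3)
```

In this sketch only `summaries` would leave the device. The raw readings never travel over the network, which is the trade-off described above: less data in transit, at the cost of doing some work on limited hardware.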

Working on what’s next

As developers at Mirego, our goal is to prepare for the future without risking it all on early assumptions. One of the things we have started thinking about is designing software with the user’s intent in mind rather than simply thinking about a list of features. How can we enable the user to accomplish a task without being too specific about how that task will be performed? Will it come from a voice command? Will it come from a chat with an AI assistant? Will it be very specific (“I want to watch John Wick 3”) or vague (“I want to watch a recent action movie with great reviews”)? While it is clearly not just an engineering challenge, this is something that developers should keep in mind when architecting their apps. It also has the added benefit of making our apps more accessible, which has become an important concern over the last few years.
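One possible way to picture intent-based design is to separate what the user wants from how they expressed it. The Python sketch below is only an illustration under our own assumptions: `WatchIntent`, `Movie`, `intent_from_search_box`, `intent_from_assistant`, and `resolve` are hypothetical names, the catalog is made-up sample data (including the ratings), and the "recent" part of the vague request is left out to keep the example short.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class WatchIntent:
    """What the user wants, independent of the input channel (hypothetical)."""
    title: Optional[str] = None
    genre: Optional[str] = None
    min_rating: Optional[float] = None


@dataclass(frozen=True)
class Movie:
    title: str
    genre: str
    rating: float


# Made-up sample catalog; the ratings are placeholder values, not real data.
CATALOG = [
    Movie("John Wick 3", "action", 7.0),
    Movie("Sample Action Movie", "action", 8.5),
    Movie("Sample Drama", "drama", 9.0),
]


def intent_from_search_box(text: str) -> WatchIntent:
    """A specific request: the user typed or said an exact title."""
    return WatchIntent(title=text.strip())


def intent_from_assistant(slots: Dict[str, object]) -> WatchIntent:
    """A vague request: an assistant extracted loose criteria from a conversation."""
    rating = slots.get("min_rating")
    return WatchIntent(
        genre=str(slots["genre"]) if "genre" in slots else None,
        min_rating=float(rating) if rating is not None else None,
    )


def resolve(intent: WatchIntent, catalog: List[Movie]) -> List[Movie]:
    """Single entry point: every input channel ends up here."""
    if intent.title is not None:
        wanted = intent.title.lower()
        return [m for m in catalog if m.title.lower() == wanted]
    matches = [
        m for m in catalog
        if (intent.genre is None or m.genre == intent.genre)
        and (intent.min_rating is None or m.rating >= intent.min_rating)
    ]
    # Best-rated first, so a vague request still yields a sensible top pick.
    return sorted(matches, key=lambda m: m.rating, reverse=True)


specific = resolve(intent_from_search_box("john wick 3"), CATALOG)
vague = resolve(intent_from_assistant({"genre": "action", "min_rating": 8.0}), CATALOG)
```

The point of the sketch is that adding a new input channel (voice, chat, eye tracking) would only mean adding another small adapter that produces a `WatchIntent`; the `resolve` logic would stay the same.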

Project Gameface, a new open-source AI-powered gaming mouse that enables users to control a computer's cursor using their head movement and facial gestures.

We believe that a lot of the processing will benefit from being done at the edge, for reasons such as performance and privacy. To do that successfully, developers need to be very aware of the performance of their code, since edge devices generally have limited computation capabilities. With powerful servers offering seemingly unlimited capacity, and with higher-level languages hiding many low-level details, modern developers often overlook the importance of code optimization. That will no longer be an option if our goal is to deploy to edge devices with severe limitations.

While we feel that it is important to adapt engineering practices to tomorrow’s reality, no one really knows which emerging technology or device will stick. Encouraging developers to stay curious and try as many new things as possible is crucial. We have labs in our offices where our team members can try new devices or invest in building prototypes and proofs of concept on emerging technologies. While much of that work is unlikely to yield significant results, being ready when a new trend picks up steam is the name of the game: it’s far easier to board a train at the station than when it’s speeding toward its destination.

While it’s difficult to predict how the next few years will unfold, there is a clear trend of more devices with more data to process and consume. The trend has only accelerated over the last 15 years and shows no sign of slowing down. We see this as a massive opportunity to build incredible new digital products and experiences for our customers. It’s impossible to know for sure which devices will go mainstream, so we’ve adopted a culture of making small bets: we throw a lot of spaghetti at the wall to gain a deep understanding of the underlying technologies and their strengths, so that we are ready to dive deep when something gains traction.