Apple Intelligence — Context is the New Location

June 12, 2024

One of the advantages of smartphones has always been their ability to provide user context more effectively than computers. This capability took a significant leap in 2008 with the iPhone 3G, which introduced GPS alongside improved connectivity. Location-based features became a game-changer, driving the functionality of many apps we rely on today.

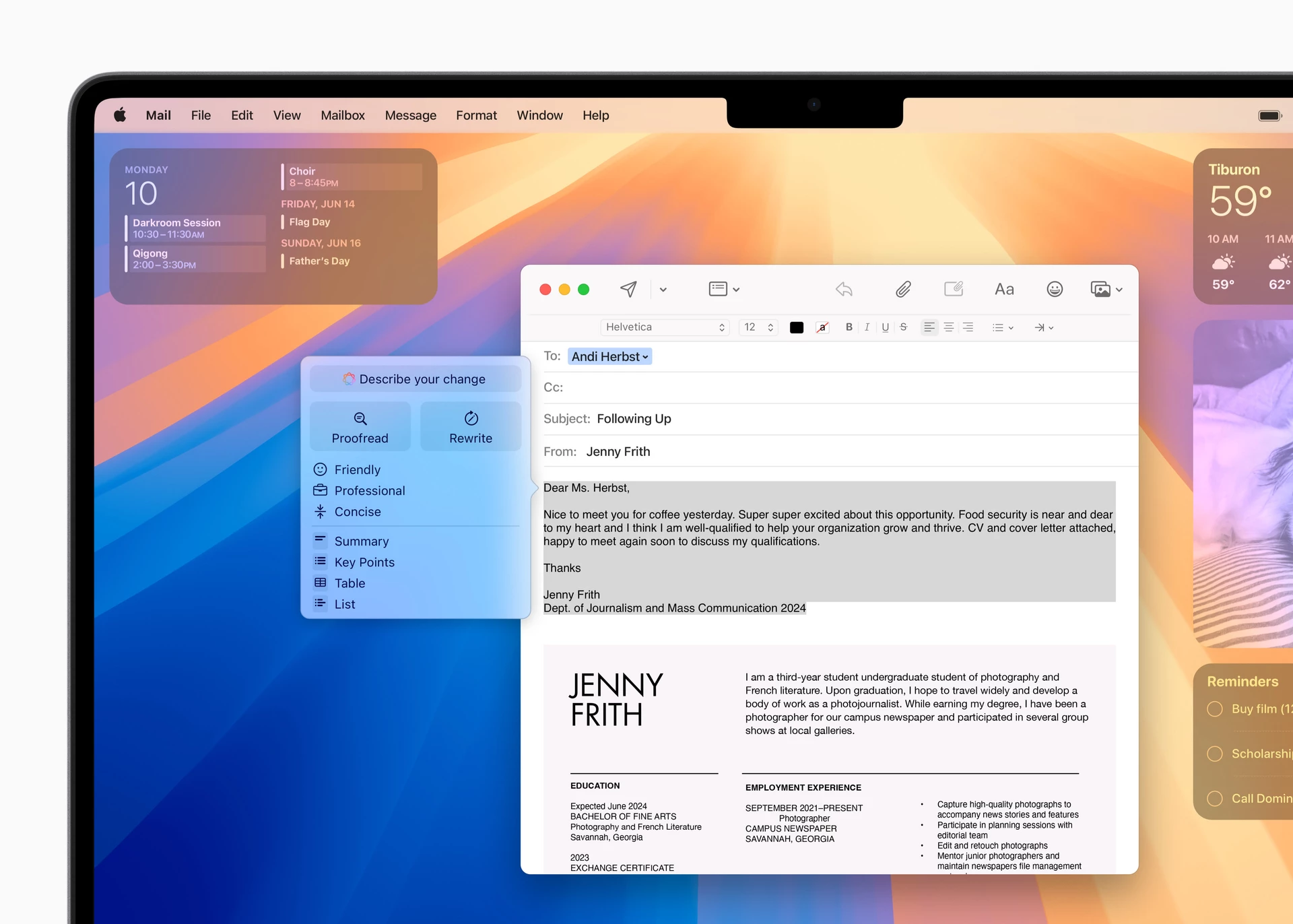

At its latest WWDC, Apple unveiled its first take on generative artificial intelligence for its iPhone, iPad, and Mac operating systems. Called Apple Intelligence, the AI is integrated directly into the operating system, so third-party developers and their users can readily benefit from new capabilities for understanding and creating language, such as rewriting, proofreading, and summarizing text. More importantly, it gives the AI more context on which to act.

The more context AI has, the better the experience.

Devices like the iPhone create a private, local knowledge database that integrates data from all Apple applications, forming a comprehensive and interconnected information network. This data, deeply embedded in the device's ecosystem, is expected to be accessible to developers in the future, unlocking new possibilities for app functionality. Unlike current cloud-based LLMs such as GPT, which may lack in-depth user information, Apple Intelligence draws on its intimate knowledge of the user to deliver highly personalized, contextually aware responses and actions.

When Apple Intelligence is activated, it seamlessly integrates your current context, such as the active app, your schedule, and background applications. For instance, if a user asks Siri, “When should I leave to pick up my mother?”, Siri already understands who the person is, the user's current location, and that a train ticket was purchased for her from the email receipt in the Mail app. It then only needs to quickly check the current traffic conditions to the train station to provide an accurate response.
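To make the reasoning in this example concrete, here is a minimal sketch in Python. It is not Apple's implementation or API: the `Context` class, its fields, the sample names, and the five-minute buffer are all hypothetical, chosen only to show how pieces of context that are already known can be combined with a single fresh lookup (travel time).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Context:
    """Hypothetical on-device context; field names are illustrative only."""
    relations: dict[str, str]       # relationship -> contact name
    arrivals: dict[str, datetime]   # contact name -> arrival time parsed from an email receipt


def departure_time(context: Context, relation: str, travel_time: timedelta,
                   buffer: timedelta = timedelta(minutes=5)) -> datetime:
    """Work backwards from the arrival time to the moment the user should leave."""
    person = context.relations[relation]   # who "my mother" is
    arrival = context.arrivals[person]     # when her train gets in
    return arrival - travel_time - buffer  # leave early enough to be there on time


# Assumed sample data: everything except travel_time is already "known" context.
context = Context(
    relations={"mother": "Jane Doe"},
    arrivals={"Jane Doe": datetime(2024, 6, 12, 18, 30)},
)
# The only live lookup would be current traffic, modeled here as a fixed travel time.
leave_at = departure_time(context, "mother", travel_time=timedelta(minutes=25))
print(leave_at.strftime("%H:%M"))  # 18:00
```

The point of the sketch is the shape of the problem: the user never supplies the person, the arrival time, or the destination, because the context layer already holds them.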

The era of the input box is over.

Prior to this WWDC, generative AI features such as image creation, task requests, and suggestions relied heavily on an input box, requiring users to have prompt engineering skills to get the most out of them. Apple has demonstrated that this method might not offer the best user experience for most use cases.

By integrating AI deeply within its devices, Apple has set a new standard for delivering pre-built experiences that eliminate the need for text input. Starting a memo recording with a voice command, altering the tone of an email, creating your own custom emojis, pasting text so that it blends into handwritten notes, and generating new images based on a moodboard are all seamlessly integrated experiences powered by generative AI, without the need to type a single letter. This exemplifies the second level of our AI maturity framework, which emphasizes integrating existing AI tools into digital products to address specific needs or to create an experience that feels almost like magic.

Source: Apple. Smart Script makes handwritten notes fluid, flexible, and easier to read, all while maintaining the look and feel of a user’s personal handwriting.

✦

Apple Intelligence will be available to developers in a beta release later this summer. This includes smart writing tools, integrated image generation, and App Intents across various domains, which developers will soon be able to integrate into third-party applications. These advancements unlock a world of new possibilities for integrating artificial intelligence into digital products, promising a wave of innovations in the coming months.